Kyvos Debuts OLAP for Hadoop

Many technology pros view OLAP as a legacy technology, a holdover from the days of data warehousing that doesn’t have a place in today’s big data world. But several startups are fighting to change that perception, including Kyvos Insights, which today unveiled its OLAP-on-Hadoop solution.

Twenty years ago, online analytical processing (OLAP) was the center of many enterprise data warehouse (EDW) initiatives. The technology, which is largely synonymous with the term “multi-dimensional database,” gave organizations a way to pre-index and aggregate large amounts of data in such a way that analysts can interact with the “cube” in a real-time manner–slicing and dicing and drilling up and drilling down to their heart’s content.

Then Hadoop arrived on the scene, and what people thought of as big data in an EDW environment suddenly looked not so big after all. People became enamored with the prospect of using distributed processes like MapReduce to crunch insights out of really data sets measured in the tens of petabytes. This was something new, and OLAP was old, so the new thing must be better, right?

Wrong, says Kyvos Insights Vice President of Products Ajay Anand. “With Hadoop you have a cost-effective, scalable environment so you can bring in all kinds of data into Hadoop and use it as a data lake,” Anand says. “But the trouble with Hadoop is it’s hard to use and not interactive. Organizations bright data into Hadoop, but they can’t get value from it so easily.”

Many Hadoop shops today are turning to  SQL engines, such as Hive and Impala, to enable business analysts to get value out of Hadoop. But SQL should not be viewed as a panacea for the organization, warns Anand.

SQL engines, such as Hive and Impala, to enable business analysts to get value out of Hadoop. But SQL should not be viewed as a panacea for the organization, warns Anand.

“The trouble is you get a query and you have to run a SQL job on Hive, which takes time, so you’ve got slow response time,” he says. “For certain queries, Impala can take 20 to 30 minutes to return a query. Hive and Impala are running the SQL query on Hadoop using either Spark or MapReduce, but it will take time because you’re trying to process a huge amount of data to get the value from that query in real time.”

Many organizations try to get around this problem by precomputing aggregations on Hadoop. By first running MapReduce or Pig jobs against the data, they can hammer it into a higher structure, and then move it to a traditional EDW to generate reports. “Surprisingly this is true for companies like Yahoo and Facebook,” Anand says. “Yahoo would do pre-aggregations on a huge Hadoop installation, but then would be creating aggregates and moving them to SQL Server to run reports.”

There are several problems with this approach. First, moving that much data around creates lags in the cycle and increases latency. It’s also anathema to Hadoop in a couple of manners, including its “bring-the-compute-to-the-data” mantra, as well as the big data notion that data should be analyzed in as close to its natural form as possible to preserve details.

The folks at Kyvos Insights think they have come up with a solution that offers the best of both worlds. In other words, it offers the speed and interactivity of OLAP and the scalability and flexibility of Hadoop.

The software essentially builds a cube directly in on HDFS by using indexes and building aggregations of the data. Once the cube is built with all the dimensions that a customer will want to explore against, then users can fetch the data directly from the cubes.

Anand elaborates: “We give you a simple, visual way to transform the data, and then decide what dimensions and so forth you’re interested in storing,” he says. “We build the cubes, store them right there on the file system, then give you a front-end to interact with the cube, to create visualizations and so forth.” Customers can either use Kyvos’ visualization tools or they can point the visualization tools from Tableau, Qlik, or Microstrategy against the cube, via support for Microsoft‘s MDX protocol.

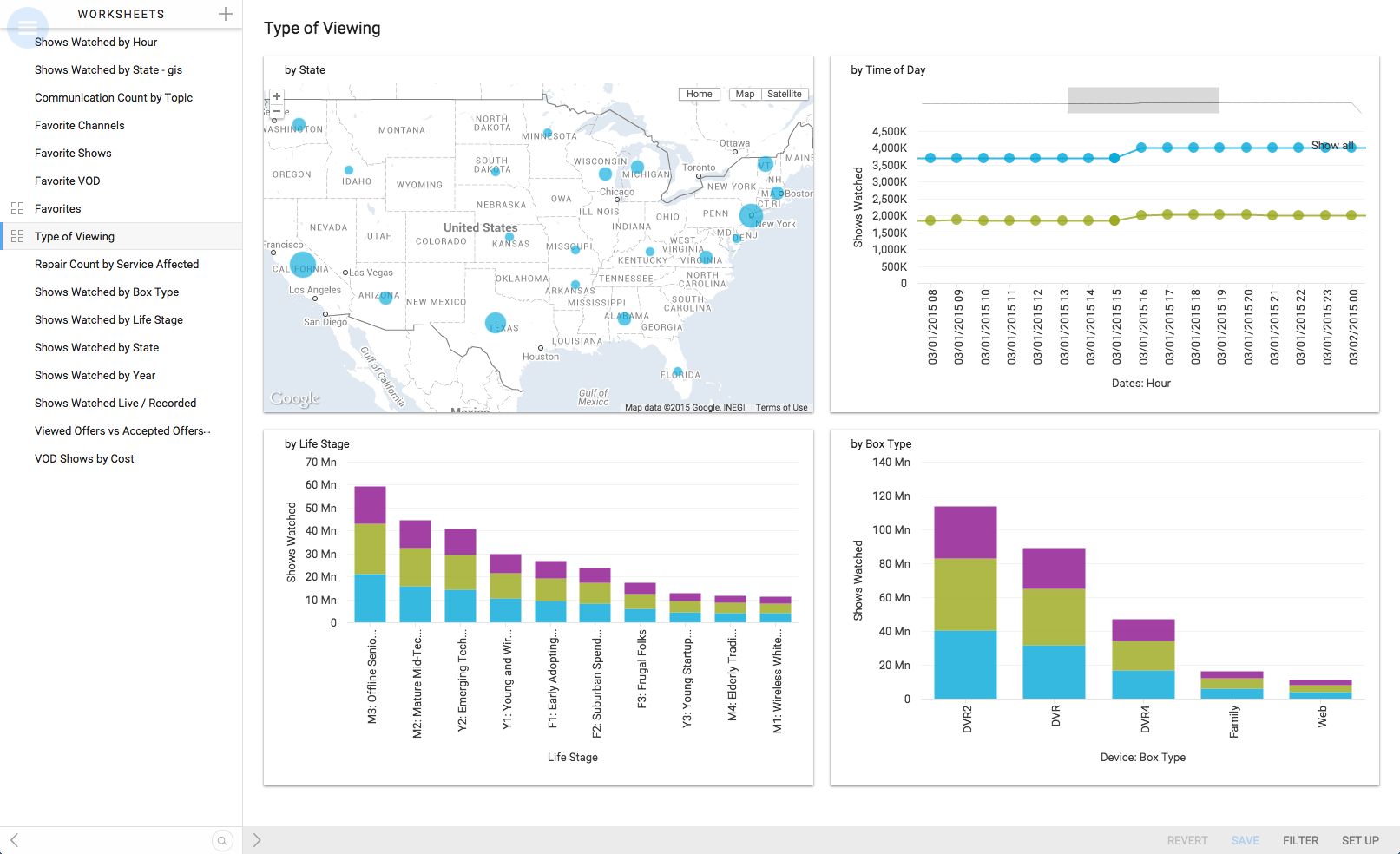

Users can interact with large amounts of highly varied data in real-time using the OLAP-on-Hadoop solution from Kyvos Insights

The cubes Kyvos can build and run on Hadoop are orders of magnitude bigger than what could be built on traditional OLAP gear. Instead of getting rid of the granular level of detail that would ordinarily be summarized or aggregated in a traditional OLAP setup, Kyvos can build a specific dimension for each column or field, whether it’s an individual customer or an individual SKU (stock keeping unit).

“If you think about Hadoop, it frees up traditional constraints, and if you take off the constraints, now you can build all that detail in the cube itself so you can drill down into really low-level details and get granular information about the data all in that OLAP construct,” he says.

That data granularity is measured by the “cardinality” of the cube. The cubes that Kyvos builds can handle 100 million to 200 million different types of data. That’s far beyond what the typical open source OLAP solution can handle, which is in the high hundreds of thousands to 1 million types.

“That’s the problem we solve, to give you the ability to deal with data sets that go to 100 million-plus cardinality,” Anand says. “And the number of rows, the transactions and so forth, can be in the hundred-billion-plus range. We have a customer in telco looking at a data set of 500 billion rows on a 96-node cluster.”

There are two things happening with data on Hadoop. One is the algorithms, Anand says. “You’ve got data scientists writing Java or Python scripts and doing machine learning to munge this data and do analysis on it. But there’s also a human element,” he says. “You want to get access to the data and get quick insights and follow a train of thought in terms of exploring that data, and that’s where OLAP comes in.”

Maybe OLAP wasn’t dead, just merely on hiatus. “There’s a reason that OLAP has existed for all these years, to give you that interactive analysis,” Anand says. “Just a SQL query is not enough and it would not be fast enough if you’re doing any kind of real-time exploration of your data.”

Kyvos is self-funded and based in Los Gatos, California. The company is working with all the Hadoop distributors, and has certified against MapR Technologies distribution. WHlie the company is officially coming out of stealth mode today, it already has a number of companies using its solution, including Entravision Communications Corporation, a media company that serves Latino markets.

Related Items:

Top Three Things Not To Do in Excel

Why Hadoop Won’t Replace Your Data Warehouse

Moving Beyond ‘Traditional’ Hadoop: What Comes Next?