Google Lauds Outside Influence on Apache Beam

Apache Beam started life as a decidedly Google-ish technology designed to mask the complexity inherent in building sophisticated analytic pipelines that run across distributed systems. But in the year it took to become a top-level project at the Apache Software Foundation, the unified big data programming framework has gained a more varied cast of supporting characters.

The input from people outside of the Googleplex has made Beam a better project, says Tyler Akidau, an Apache Beam PMC member and a staff software engineer at Google, which donated the Beam source code to the ASF one year ago.

“Though there were many motivations behind the creation of Apache Beam, the one at the heart of everything was a desire to build an open and thriving community and ecosystem around this powerful model for data processing that so many of us at Google spent years refining,” Akidau wrote in a blog post today. “But taking a project with over a decade of engineering momentum behind it from within a single company and opening it to the world is no small feat. That’s why I feel today’s announcement is so meaningful.”

Akidau shared some statistics about the influence on Beam that came from outside the company. For starters, at least 10 of the 22 large modules in Beam were developed from scratch by the community, “with little to no contribution from Google,” he says.

“Since September,” he added, “no single organization has had more than [about] 50% of the unique contributors per month.” And the majority of new committers added during incubation came from outside Google.

Some of the big names contributing to Beam include Hadoop developer Cloudera, data integration and ETL software developer Talend, and data Artisans, the company behind Apache Flink. The e-payment transaction company PayPal is also working with Beam.

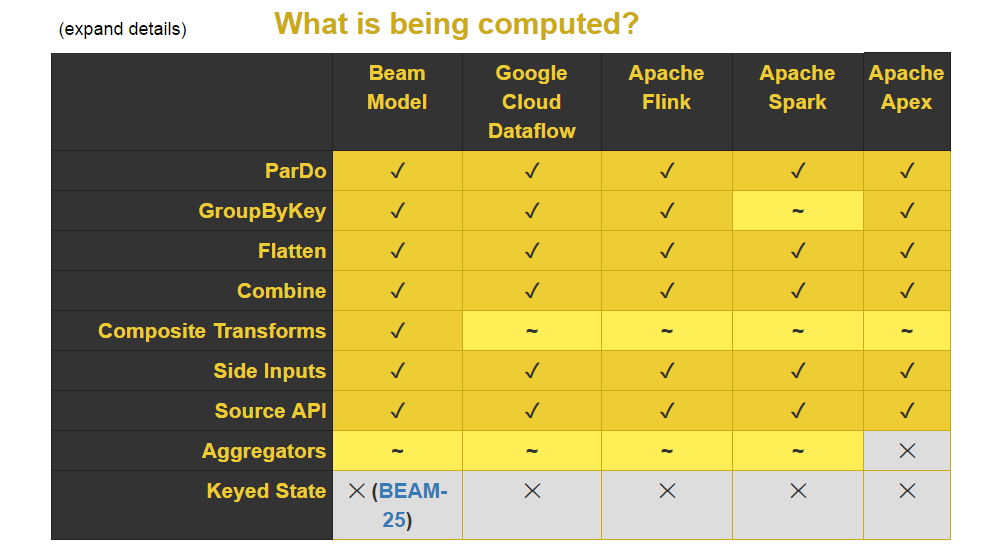

Part of the Apache Beam support matrix (Source: Google)

PayPal’s Director of Big Data Platform, Assaf Pinhasi, says Apache Beam helps the company by making stream processing accessible to a broad audience of data engineers through a single API that’s decoupled from the underlying execution engine. “Our data engineers can now focus on what they do best – i.e. express their processing pipelines easily, and not have to worry about how these get translated to the complex underlying engine they run on,” he says in a press release.

When Beam started incubating at the ASF, it featured three so-called “runners,” the underlying execution engines that the Beam API can target: Google Cloud Dataflow itself, plus runners for Apache Spark and Apache Flink, which were in development. Akidau says Apache Beam now supports five runners, including one for Apache Apex, the real-time processing engine originally developed by DataTorrent, which was founded by former Yahoo engineers.
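To make the runner idea concrete, here is a minimal sketch in plain Python of what it means for a pipeline definition to be decoupled from the engine that executes it. This is a toy illustration, not the Beam SDK: the `Pipeline`, `LocalRunner`, and `ThreadedRunner` names are hypothetical and exist only for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List

# Hypothetical toy model, NOT the Apache Beam API: a pipeline is an
# ordered list of element-wise steps, defined once.
Step = Callable[[str], str]


class Pipeline:
    def __init__(self) -> None:
        self.steps: List[Step] = []

    def apply(self, step: Step) -> "Pipeline":
        # Record the step; nothing is executed at definition time.
        self.steps.append(step)
        return self

    def transform(self, element: str) -> str:
        # Push one element through every recorded step, in order.
        for step in self.steps:
            element = step(element)
        return element


class LocalRunner:
    """Executes the pipeline sequentially in the calling thread."""

    def run(self, pipeline: Pipeline, data: Iterable[str]) -> List[str]:
        return [pipeline.transform(x) for x in data]


class ThreadedRunner:
    """Executes the same pipeline on a thread pool (a stand-in for a cluster)."""

    def __init__(self, workers: int = 4) -> None:
        self.workers = workers

    def run(self, pipeline: Pipeline, data: Iterable[str]) -> List[str]:
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            # pool.map preserves input order, so results match LocalRunner.
            return list(pool.map(pipeline.transform, data))


# The pipeline is written once...
pipeline = Pipeline().apply(str.strip).apply(str.lower)
words = ["  Beam ", "SPARK", " Flink"]

# ...and handed to whichever runner is available.
local_result = LocalRunner().run(pipeline, words)
threaded_result = ThreadedRunner().run(pipeline, words)
```

The point of the sketch is only that the code describing the processing never mentions how it is executed; swapping the runner changes where the work happens, not what the pipeline says.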

While it’s still early days for Apache Beam, the progress gives Akidau reason to be optimistic. “Naturally, graduation is only one milestone in the lifetime of the project, and we have many more ahead of us, but becoming [a] top-level project is an indication that Apache Beam now has a development community that is ready for prime time,” he writes.

Looking ahead, the Beam community will be “pushing forward the state of the art in stream and batch processing,” Akidau writes. “We’re ready to bring the promise of portability to programmatic data processing, much in the way SQL has done so for declarative data analysis. We’re ready to build the things that never would have gotten built had this project stayed confined within the walls of Google.”
