50 Years Of ETL: Can SQL For ETL Be Replaced?

(Wright Studio/Shutterstock)

It’s hard to imagine data warehousing without ETL (extract, transformation, and load). For decades, analysts and engineers have embraced no-code ETL solutions for increased maintainability. Does this mean that Structured Query Language (SQL) for ETL workflows is not used anymore? No. There are many examples where SQL has been the go-to choice for performing extractions and transformations. The question is, will this always be the case?

Building an ETL workflow can never be a fully automated process. Every problem is unique. Data from the source is diverse and dynamic. These multiple data sources require different connectors to bring the data to its destination.

The current generation of ETL software has wide access to the connectors and actions to perform data loads, orchestrations, and transformations. Actions in the managed ETL solutions are sufficient enough to take care of the transformations, albeit there aren’t any automated ways by which ETL tools can optimize and improve the performance of data transformation and loading.

Skillset To Build ETL Pipelines

The role of an ETL developer is not to just create workflows but to get a complete understanding of data sources, warehouses, and data pipelines. Thorough knowledge of database engines and a good grasp of SQL are skills required from an ETL developer.

Apart from SQL, other skills include a firm grasp of a programming language, such as Python or Scala, and a basic knowledge about the cloud components and how they work.

SQL is not confined to the traditional relational database systems (RDBMS) and data warehousing solutions. SQL-on-Hadoop engines run on top of distributed file systems to help process big data and build data lakes and data warehouses.

It is high time for organizations to agree that SQL should also be part of ETL developers’ skill sets.

Pitfalls of ETL Solutions

ETL solutions are fun, and it’s a pleasure to work with the drags and drops to transform the datasets. However, this is just the tip of the iceberg. There are a few concealed pitfalls to consider when one decides to go with ETL solutions:

- Staging tables that are dynamically created by ETL tools may not be optimized for transformations to be run on them. This could be the reason behind all those slow pipeline runtimes. Knowledge about SQL can help resolve this problem by manually creating tables to tap the maximum performance out of the pipelines;

- Choice of the right ETL tools is another factor that needs to be considered. When you are using databases that are not cloud-native on a cloud-native ETL solution, the performance can be deteriorating for certain edge cases. Choose the one that’s right and compatible with your source;

- There’s a high probability that you might get stuck on some operation on the data where the ETL action’s result is inaccurate compared to the result that you might be expecting. One common example is the way NULLs are handled in different ETL solutions. When a GROUP BY operation is performed on the dataset, some ETL tools will be counting the NULL values while others won’t;

- Most of the ETL tools out there neither consider the definition of the source tables nor do they examine the query plan for the transformation that you have assigned to the workflow beforehand and suggest changes for improvements;

- Unless the staging tables are clearly defined, there isn’t any way to recover the data that are missed during the transit or pipeline failures.

SQL to the Rescue

SQL can solve complex transformations and provide an edge over other ETL actions. This doesn’t mean that ETL solutions are deemed useless. ETL solutions are better in data orchestration and they provide precise maintainable and reusable data pipeline structures that can be collaborated easily with other members of the team.

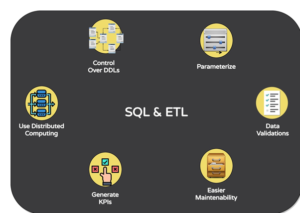

Can a hybrid approach be adopted by combining the best of both worlds – SQL and ETL? Indeed, that is the need of the hour.

The following are the ways by which SQL can co-exist with a code-free ETL solution.

- The current generation ETL solutions in the market are equipped with a SQL action, which the users can use to run any SQL queries (DDLs, Stored Procedures, Index Creations, you name it!). This feature of ETL tools is indispensable for building a robust and hybrid data pipeline;

- Data validations can be done in a combined manner where part of the validation such as data type checks can be done using SQL whereas other integrity checks like foreign key constraints, NULL checks, and duplicates are straightforward to be identified in ETL solutions;

- Data cleaning and transformation for high volume data needs more distributed computing power to process the transformation. The ETL solutions are capable of using Hadoop or Spark-based executors that can run processing jobs containing SQL-based data transformations on top of the object-store. At a nominal price, depending on the volume the data is effectively processed using external executors;

- By moving the transformation logic to SQL, there is a separation between the ETL pipeline and the SQL scripts. This helps in easier maintainability and ease in running tests on the SQL scripts;

- The parameterization of the workflows allows the parameters such as dates to be dynamically passed to the SQL statements and executed to bring the transformed delta data to the destination;

- The work of SQL doesn’t end with the ETL completion. SQL is what drives Business Intelligence by obtaining the Key Performance Indicators (KPIs) based on effective grouping of the required columns. Generation of these KPI tables involving a refresh of the derived tables is done based on API calls triggered from the ETL pipelines to populate the cube.

Thus, ETL tools are never complete without SQL. No-code ETL solutions are the future of ETL processing. However, a dash of SQL will always be preferred to complete the last mile. The bottom line is, even the market leaders of Data Integration should consider the usage of SQL inside the data pipelines.

About the author: John Martinez is a tech nerd currently working at Dataquest. He spends his time creating content, playing chess, and pretending like he knows how to cook.

Related Items:

ETL vs ELT – What is the Difference?