What We Can Learn From Famous Data Quality Disasters in Pop Culture

(Studiostoks/Shutterstock)

Bad data can lead to disasters that cost hundreds of millions of dollars or — believe it or not — even the loss of a spacecraft.

Without processes that guard the integrity of your data every step of the way, your organization might suffer catastrophic mistakes that erode trust and lose a fortune. As a reminder to make sure that high-quality data is an end-to-end priority for all types of industries, let’s look at some of the biggest data quality incidents in recent pop culture history.

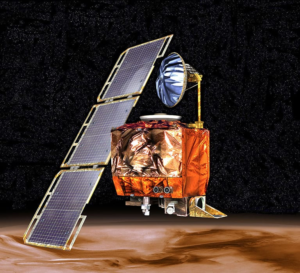

NASA: Lost a Mars Orbiter Worth $125 Million Due to a Data Units Error

In 1999, NASA lost a Mars orbiter that cost $125 million because the Lockheed Martin engineering team used Imperial units while NASA used metric units.

Because the data’s units didn’t match, information couldn’t transfer from the Lockheed Martin team in Denver to the NASA flight team in Pasadena, California. Without good data, NASA’s flight team couldn’t land the orbiter on the red planet, and the hundred million dollar piece of technology crashed on the surface.

IT Chronicles reports, “The problem was that one piece of software supplied by Lockheed Martin calculated the force the thrusters needed to exert in pounds of force — but a second piece of software, supplied by NASA, took in the data assuming it was in the metric unit, newtons. This resulted in the craft dipping 105 miles closer to the planet than expected — causing its total incineration, setting NASA years back in its quest to learn more about Mars, and a $327.6 million mission being burnt up in space.”

But NASA is not alone in battling bad data. IT Chronicles says 60% of companies have unreliable data health. Even if the stakes aren’t as high as landing a spacecraft, you should take steps to maintain the integrity of your data.

Tom Gavin, the Jet Propulsion Laboratory administrator to whom all project managers reported, said about their mishap: “This [was] an end-to-end process problem. … Something went wrong in our system processes in checks and balances that we have that should have caught this and fixed it.”

So what should be done to ensure the health of your data? First, managers at every level of your organization need to be invested in a good process that assures high-quality data. Next, even if you’re not headed to Mars, be sure to run quality assurance tests on your data before major launches. Most importantly, don’t rely on one person in your organization to look after your data. Make it an organizational priority.

While one person didn’t notice the discrepancy in metrics, that wasn’t the failure. As Gavin said, “People make errors. … It was the failure of us to look at it end-to-end and find it. It’s unfair to rely on any one person.”

Amsterdam’s City Council: Lost €188 Million Due to Housing Benefits Error

In 2014, when Amsterdam used software programmed in cents instead of euros, it sent out €188 million to poor families instead of €1.8 million and was forced to ask for the extra money to be returned.

The details of Amsterdam’s error are astonishing. Citizens who would have regularly received €155 instead were sent €15,500. Some even got as much as €34,000! However — and equally as mind-blowing — nothing in the software alerted administrators, and no one in the city government noticed the error.

Data quality disasters like Amsterdam’s blunder are bad for leadership and morale. When Pieter Hilhorst was appointed Amsterdam’s finance director the year prior to the snafu, he already faced opposition due to a lack of experience. After the 2014 disaster, Hilhorst was forced to order a costly KPMG investigation into how the data error happened, said the Irish Times. Because of the error, and unexpected rise and fall in income, some Amsterdam residents faced financial hardship, including running up debts. Ultimately, after all the problems it caused, the city government had to apologize “unreservedly,” which is never a position any leader wants to be in.

To avoid a monumental mistake like Amsterdam made, make sure that leadership is invested in high-quality data from the beginning. Instead of hiring a consultancy to find out why a mistake happened after the fact, experts recommend running a premortem. In a premortem, members of your organization try to suss out weak spots in advance of your project going live. Such “prospective hindsight” increases the ability to identify reasons for future outcomes by 30%, according to research.

Despite all the problems with Amsterdam’s data disaster, there’s one silver lining that restores faith in humanity. In an amazing display of responsibility by Amsterdam’s neediest, all but €2.4 million of the extra payments were returned to the city’s coffers!

Data Quality Disasters Are All Too Common

We’ve already looked at a couple of spectacular disasters, but data mishaps are all too common in everyday business.

Data reliability is a massive problem across organizations, even if they aren’t crashing rockets on Mars. It’s no wonder, then, that data teams are reporting that data quality has risen to the top priority KPI. Of course, tackling the issue of data quality is a massive topic on its own with a variety of best practices and principles that are still emerging.

For now, the best advice for maintaining the highest possible quality data, while hitting tight deadlines and iterating quickly, is for organizations to move their data reliability as far “to the left” as possible. This means picking up on errors early in the process with proactive data quality and consistent testing. By staying ahead of these potential problems, companies and other organizations can avoid the embarrassment and financial losses from data errors like these.

About the author: Gleb Mezhanskiy is founding CEO of Datafold, a data reliability platform that helps data teams deliver reliable data products faster. He has led data science and product areas at companies of all stages. As a founding member of data teams at Lyft and Autodesk and head of product at Phantom Auto, Gleb built some of the world’s largest and most sophisticated data platforms, including essential tools for data discovery, ETL development, forecasting, and anomaly detection. Visit Datafold at www.datafold.com/, and follow the company on Twitter, LinkedIn, Facebook and YouTube.

Related Items:

Kinks in the Data Supply Chain

Room for Improvement in Data Quality, Report Says