Are Neural Nets the Next Big Thing in Search?

(Andy Chipus/Shutterstock)

Many, if not most, search engines in use today are based on keywords, in which the search engine attempts to find the best match for a word or set of words used as input. It’s a tried-and-true method that has been deployed millions of times over decades of use. But new search approaches based on deep learning, including vector search and neural search, have emerged recently, and early backers say they have the potential to shake up the search market.

Vector search takes a fundamentally different approach to finding the best fit between the input to the engine and the results presented to the user. Instead of directly matching keywords one to one, a vector search engine converts the input into a vector and matches it against vectors, which are arrays of features generated from the objects in the catalog.

This approach relies on a machine learning (or deep learning) model to derive those features from the objects in the catalog. Once the ML or DL model has converted each object into a vector with tens to hundreds of dimensions, the user can run searches against those vectors to generate results.
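To make the indexing step concrete, here is a minimal Python sketch of turning catalog objects into fixed-length vectors. It is a stand-in built on assumptions: a trained ML or DL encoder would normally produce the vectors, but the hypothetical `embed` function below uses feature hashing of words so the example runs without any model. What matters is the shape of the output: every object becomes one flat list of the same length (`DIM`, an arbitrary choice here), which is what a vector search engine indexes.

```python
import hashlib
import math

DIM = 64  # hypothetical dimensionality chosen for illustration

def embed(text: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for a learned encoder (feature hashing, not ML).

    Each lowercase word is hashed into one of `dim` buckets with a +1/-1 sign,
    then the vector is scaled to unit length. A trained model would place
    texts with similar meaning near each other; this toy only reflects shared words.
    """
    vec = [0.0] * dim
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "little") % dim
        sign = 1.0 if digest[4] & 1 else -1.0
        vec[bucket] += sign
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec

# Offline indexing: every catalog object becomes one fixed-length vector.
catalog = {
    "doc1": "red running shoes",
    "doc2": "blue denim jacket",
}
index = {doc_id: embed(text) for doc_id, text in catalog.items()}
print(len(index["doc1"]))  # 64
```

Because this toy encoder only hashes words, it has no notion of meaning; swapping in a real model is what gives vector search its semantic behavior.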

Vector search can potentially deliver better results because it captures richer context and more nuance than a basic keyword search can. And vectors can be generated not only from groups of words but also from images, video, and audio.

One early believer in vector search is Bob Wiederhold, the former Couchbase CEO who is now an independent advisor and investor in tech startups. Recently Wiederhold started working with a startup named Pinecone, which has developed a vector database that is being used to power search engines, among other use cases.

“Elastic is dominating the third-generation, keyword search-type approach. What the next-generation players are leaning towards is this more of this machine learning-driven approach,” Wiederhold says. “Basically, what Pinecone does is we use machine learning technology to take…that text-based input and convert that into vectors.”

Instead of finding an exact match with a limited number of keywords, vector search takes a fuzzier approach that seeks the best approximation among a much wider array of semantic meanings embedded in the vectors. “The idea is that basically you’re looking for nearest neighbors,” Wiederhold says. “From a vector perspective, you’re looking for something close to the input vector in your vector database.”
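A minimal sketch of the nearest-neighbor lookup Wiederhold describes, assuming the vectors already exist: it scores every stored vector by cosine similarity to the query vector and keeps the top `k`. The three-dimensional vectors and their labels are made up for illustration. This brute-force scan is exact but touches every vector; vector databases typically use approximate nearest-neighbor indexes to avoid that cost at scale, and nothing here reflects Pinecone’s own implementation.

```python
import heapq
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def nearest_neighbors(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact (brute-force) k-nearest-neighbor search by cosine similarity."""
    return heapq.nlargest(k, vectors, key=lambda doc_id: cosine(query, vectors[doc_id]))

# Hypothetical 3-dimensional vectors; real embeddings are much longer.
vectors = {
    "sneakers": [0.9, 0.1, 0.0],
    "running shoes": [0.8, 0.2, 0.1],
    "winter coat": [0.0, 0.3, 0.9],
}
query = [0.85, 0.15, 0.05]
print(nearest_neighbors(query, vectors, k=2))  # ['sneakers', 'running shoes']
```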

It’s up to Pinecone’s customers to generate the vectors using machine learning models. There are so many good machine learning tools on the market, including transformer models like GPT-3 and BERT, that Pinecone didn’t want to touch that space. Instead, Pinecone provides a way to take the vectors generated by those models and put them to use.

It’s all about delivering a better user experience, says Greg Kogan, Pinecone’s vice president of marketing. “It provides more relevant results because, instead of an exact keyword match, it’s actually searching by the meaning of that query, and comparing it against the meaning of known documents,” he says.

The San Mateo, California-based company envisions a number of use cases for its vector database, including semantic search, unstructured data search, deduplication and record matching, detection and classification, and recommendations and rankings. It also sees opportunities in security and in improving search within enterprise applications. Since the launch of version 2.0 of its offering last fall, the company has been inundated with business, Wiederhold says.

The tech giants may have started with keyword searches, but they have moved to implement vector searches in recent years. That’s a core reason why the search experience on the tech giants’ products is so much better, Kogan says.

“The big Internet companies are doing this, and that’s in part why Facebook seems to know your friends and Spotify seems to know your music tastes better than you do and Amazon knows your shopping preference and what you want to buy next,” Kogan says.

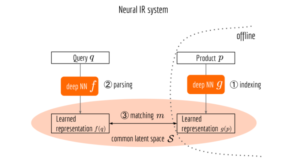

Pinecone isn’t the only company building tools for vector search. Berlin-based Jina.ai, which just raised a $30 million Series A, develops a neural search engine that uses deep learning to go beyond traditional keyword search. The company’s co-founder and CEO, Han Xiao, described how he used TensorFlow to build a neural search engine in an informative blog post in 2018.

“At the core, Solr/Elasticsearch is a symbolic information retrieval (IR) system,” Xiao writes. “Mapping query and document to a common string space is crucial to the search quality. This mapping process is an NLP pipeline implemented with Lucene Analyzer.”
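As a rough illustration of that symbolic pipeline, the sketch below runs the query and the document through the same chain of steps (tokenize, lowercase, drop stopwords, strip a plural “s”) and then counts shared terms. It is a hypothetical toy in plain Python, not Lucene’s Analyzer API, and the stopword list and suffix rule are assumptions. It also shows the fragility discussed below: a single typo in the query silently drops a match.

```python
import re

STOPWORDS = {"a", "an", "and", "for", "the", "of"}  # assumed, tiny stopword list

def analyze(text: str) -> list[str]:
    """Toy analyzer chain (hypothetical, loosely Lucene-like):
    lowercase -> tokenize -> drop stopwords -> strip a trailing plural 's'."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    terms = []
    for tok in tokens:
        if tok in STOPWORDS:
            continue
        # Crude stemming rule: "shoes" -> "shoe", but leave "glass" alone.
        if len(tok) > 3 and tok.endswith("s") and not tok.endswith("ss"):
            tok = tok[:-1]
        terms.append(tok)
    return terms

def keyword_score(query: str, document: str) -> int:
    """Number of distinct query terms that also appear in the document."""
    return len(set(analyze(query)) & set(analyze(document)))

doc = "The Best Running Shoes for Trail Runners"
print(analyze(doc))                           # ['best', 'running', 'shoe', 'trail', 'runner']
print(keyword_score("running shoe", doc))     # 2
print(keyword_score("runing shoe", doc))      # 1 (the typo loses a match)
```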

However, the symbolic IR approach suffers from several drawbacks, he writes, including fragility, complicated dependencies, difficulty in improving search quality, and difficulty in scaling to multiple languages.

A neural search system built from query logs in TensorFlow, by contrast, is “less prone to spelling errors, leverages underlying semantics better, and scales out to multiple languages much easier,” he writes.

While the neural search approach is more resilient to input noise and requires little domain knowledge about the products and languages, it’s not a slam dunk over symbolic IR, Xiao writes. “Both systems have their own advantages and can complement each other pretty well,” he writes. “A better solution would be combining these two systems in a way that we can enjoy all advantages from both sides.”
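One widely used way to combine the two kinds of systems is to run both and merge their ranked result lists. The sketch below uses reciprocal rank fusion (RRF), in which each document earns 1/(k + rank) from every list it appears in; this is a swapped-in illustration, not the approach Xiao proposes in his post. The document IDs and the conventional constant `k = 60` are assumptions.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document IDs with reciprocal rank fusion.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Larger k flattens the advantage of top ranks.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

keyword_results = ["doc3", "doc1", "doc7"]  # hypothetical symbolic (keyword) ranking
vector_results = ["doc1", "doc5", "doc3"]   # hypothetical neural (vector) ranking
print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['doc1', 'doc3', 'doc5', 'doc7']
```

Here doc1 and doc3 rise to the top because both systems retrieved them, even though each system ranked a different document first.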

Another company working on neural search is Search.io. The San Francisco startup has developed a proprietary product that uses vector search in concert with neural hashes to deliver what it claims are fast and accurate searches.

“Vector-based search engines correct for many of the problems of keyword search alone,” says Hamish Ogilvy, the company’s CEO and founder. “You’ll find companies now adding additional vector search on top of their keyword search. The problem with this is that vector search is slow and often expensive.”

The company bills its offering, called NeuralSearch, as “a new type of AI-powered search engine that utilizes neural hashes to compress and drastically speed up queries.” Ogilvy recently wrote about his approach in a post on Medium titled “Vectors are over, hashes are the future of AI.”
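Search.io’s neural hashes are proprietary, and the article does not say how they are produced. The sketch below therefore swaps in a much simpler, well-known technique, random-hyperplane locality-sensitive hashing, purely to show the general idea behind hashing vectors: each float vector is compressed into a short bit string, and comparing two items becomes a cheap XOR plus a bit count (Hamming distance). Unlike a learned neural hash, the hyperplanes here are random; the function names, the 64-bit code length, and the seed are illustrative choices.

```python
import random

def make_hyperplanes(n_bits: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Draw n_bits random Gaussian hyperplanes (not learned, unlike a neural hash)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

def binary_hash(vec: list[float], planes: list[list[float]]) -> int:
    """Pack one bit per hyperplane: 1 if the vector lies on its positive side."""
    code = 0
    for i, plane in enumerate(planes):
        if sum(p * x for p, x in zip(plane, vec)) >= 0:
            code |= 1 << i
    return code

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two codes (XOR, then count the 1s)."""
    return bin(a ^ b).count("1")

planes = make_hyperplanes(n_bits=64, dim=3, seed=42)
shoe_a = [0.9, 0.1, 0.0]
shoe_b = [0.8, 0.2, 0.1]
coat = [0.0, 0.3, 0.9]
# Vectors at a small angle tend to share most bits; distant ones differ in more.
print(hamming(binary_hash(shoe_a, planes), binary_hash(shoe_b, planes)))
print(hamming(binary_hash(shoe_a, planes), binary_hash(coat, planes)))
```

For scale, a hypothetical 768-dimensional vector of 32-bit floats occupies 3,072 bytes, while a 64-bit code occupies 8, and comparing two codes replaces hundreds of multiplications with one XOR and a bit count. The trade-off is precision, since the Hamming distance only approximates the angle between the original vectors.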

“Search tech has lagged databases mainly due to language problems, yet we’ve seen a revolution in language processing over the last few years and it’s still speeding up!” he writes. “From a tech perspective, we see neural based hashes dropping the barrier for new search and database technology.”

Enterprise search vendor Sinequa is also adopting neural search with its solutions. Up to this point, search technology has primarily been focused on counting words and statistical analysis of word forms, with some enhancement from Natural Language Processing (NLP), according to the company’s vice president of product strategy, Jeff Evernham. But that is changing.

“The advent of large language models (such as Google’s BERT – and soon to be Google MUM) changed that, bringing Natural Language Understanding to Web search,” Evernham says. “But its complexity and need for computing power have kept these advances out of enterprise search. Until now. Advances in neural search and applying this technology efficiently for corporate environments will come to the forefront and transform the enterprise search experience by bringing an unprecedented level of accuracy and contextual relevance by understanding meaning.”

Evernham says to expect incremental adoption in early 2022, with neural search capabilities “accelerating from a nice-to-have to absolutely essential” over the course of the year. “Expect a tremendous amount of market noise…and confusion…around this topic, as providers struggle to deliver and customers grapple to apply NLU,” he says.

Keyword search is so embedded in the tech stack that it will probably never go away. But that’s not stopping developers from creating new ML-based approaches that build upon the success of keyword search and deliver better experiences for users, which is ultimately the goal.

Related Items:

Think Search Is Solved? Think Again

What Is An Insight Engine? And Other Questions

Rethinking Enterprise Search for the Big Data Age

Editor’s note: This story was updated January 20 with comments from Sinequa.