Databricks Opens Up Its Delta Lakehouse at Data + AI Summit

Databricks, which had faced criticism for running a closed lakehouse, is open sourcing most of the technology behind Delta Lake, including its APIs, with the launch of Delta Lake 2.0. That was one of a number of announcements the company made today at its Data + AI Summit in San Francisco.

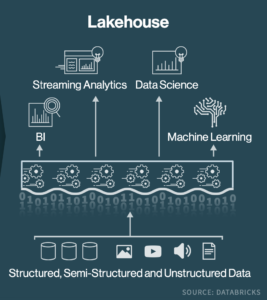

Databricks has established itself as a leader in data lakehouses, an emergent data concept that seeks to meld the controlled governance of a data warehouse with the flexibility and scalability of a data lake. Several cloud vendors, including AWS, Google Cloud, and Snowflake, have embraced the lakehouse concept with their own solutions.

While Databricks has gained traction with its lakehouse platform, called Delta Lake, the company has been criticized by industry players for not embracing open standards. Specifically, vendors like Dremio have cited the closed nature of the Delta Lake table format compared to open formats like Apache Iceberg, which Dremio’s technologists favor as part of an open data ecosystem.

But with today’s unveiling of Delta Lake 2.0, Databricks is moving strongly towards open standards by open sourcing all of the APIs. The documentation for Delta Lake 2.0, which is currently available as a release candidate with general availability expected later this year, will be made available through the Linux Foundation.

Databricks has been quietly open sourcing many of its Delta Lake capabilities behind the scenes in Jira tickets, said Databricks CEO and co-founder Ali Ghodsi.

“We actually have over the last few months secretly open sourced most of them, and there are a few more to come,” Ghodsi said in a press conference yesterday. “So we are actually open sourcing all of them, and we’ll continue to do that. So no more proprietary Delta capabilities.”

Customers can use Iceberg’s table format to enable analytics and machine learning workloads in Delta Lake, company officials say. But the folks at Databricks obviously favor their own table format, and some of the company’s customers do, too, including Akamai, the large content delivery network that hosts large chunks of the Web for faster response times.

“Databricks provides Akamai with a table storage format that is open and battle-tested for demanding workloads such as ours,” Aryeh Sivan, Akamai’s vice president of engineering, stated in a press release. “We are very excited about the rapid innovation that Databricks, along with the rapidly growing community, is bringing to Delta Lake. We are also looking forward to collaborating with other developers on the project to move the data community to greater heights.”

Linux Foundation Executive Director Jim Zemlin says the Delta Lake project is seeing strong growth and contributions from companies like Walmart, Uber, and CloudBees. “Contributor strength has increased by 60% during the last year and the growth in total commits is up 95% and the average lines of code per commit is up 900%,” Zemlin said in a press release.

While there are obvious benefits to open standards, Databricks is not giving up on its proprietary development practices. In fact, the company considers its proprietary development efforts to be a big advantage, especially when developing enterprise software.

“It’s actually pretty complicated to develop software and make sure it has high quality, and doing that in open source is actually quite costly, working with the community on all those things,” Ghodsi said. “We found that we can move faster, build the proprietary version, and then open source it when it’s battle tested, like we did with Delta. We found that…more effective. We can move faster that way. We can iterate quickly with our customer and get it to a mature state before we open source it.”

Databricks keeps strategic products closed source. For example, it currently has no plans to open source Photon, the speedy C++ layer for Apache Spark that Databricks claims is the “secret sauce” behind its big performance advantage in SQL analytic workloads. The company is rolling out new benchmarks this week that show Delta Lake with sizable performance advantages over Snowflake (although we have yet to see the actual benchmark documents).

“For us, the whole business model is keep open sourcing and keep working on the next innovation,” Ghodsi said. “And over time we’ll keep open sourcing more and more.”

Databricks made a number of other announcements at Data + AI Summit, including:

- MLflow 2.0, which introduces a new feature called MLflow Pipelines;

- Project Lightspeed, a next-generation Spark Structured Streaming engine;

- Spark Connect, which enables the use of Spark on virtually any device;

- The general availability of Photon;

- A preview of Databricks SQL Serverless on AWS;

- Open source connectors for Go, Node.js, and Python;

- Databricks SQL CLI, which enables developers and analysts to run queries directly from their local computers;

- Support for query federation in the lakehouse;

- A pipeline optimizer in the Delta Live Tables ETL offering;

- Pending GA of Unity Catalog on AWS and Azure;

- The unveiling of Databricks Marketplace;

- Data “clean rooms” for safe sharing of data.

Stay tuned to Datanami for more on these announcements, plus reports of the keynote addresses given by Ghodsi and other Databricks executives and industry experts at the Data + AI Summit, which continues through Thursday.
