Research Experiment Pits ChatGPT Against Google Search, ChatGPT Wins

(Source: Preply)

Online language tutoring platform Preply recently analyzed the performance of Google search versus ChatGPT in a research experiment, finding that ChatGPT beat Google 23 to 16 with one tie.

For this experiment, the company says it assembled a research team made up of communication and search engine experts to assess the performance of each tool in responding to a list of 40 curated questions.

“Some of the questions are frequently asked of search engines, while others embody unique challenges of intelligence. The questions cover a wide range of topics—from civics to sex—and they require answers both long and short, subjective and objective. Some are practical, and others are existential. A few are based on false premises. Others are morally sensitive, and at least one is unanswerable,” the company said in a blog post detailing the research.

The responses were scored on how well they adhered to the following 12 characteristics: Actionable, clear, comprehensive, concise, context-rich, current, decisive, detailed, efficient, functional, impartial, and thoughtful.

Example questions included how to tie a tie, whether or not Santa Claus is real, and whether it is safe for pregnant women to drink alcohol. Google gave a more functional answer on how to tie a tie through a step-by-step video. ChatGPT answered the question regarding Santa Claus with a response that was more clear, more concise, and more contextual. Google’s answer to the question about the safety of alcohol during pregnancy was more clear and more decisive than ChatGPT’s.

This table shows some of the results of Preply’s research scoring ChatGPT’s and Google Search’s responses to a set of 40 questions. (Source: Preply)

Here are Preply’s results:

- ChatGPT beats Google, 23 to 16, with one tie.

- On basic questions, Google wins 7 to 4, with one tie. On intermediate questions, ChatGPT wins 15 to 6. On advanced questions, ChatGPT wins 4 to 3.

- On low-stakes questions, ChatGPT wins 9 to 8, with one tie. On medium-stakes questions, ChatGPT wins 10 to 1. On high-stakes questions, Google wins 7 to 4.

- On objective questions, ChatGPT wins 15 to 9, with one tie. On subjective questions, ChatGPT wins 8 to 7.

- On static questions, ChatGPT wins 18 to 7, with one tie. On fluid questions, Google wins 9 to 5.
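
As a quick sanity check on the figures above, the following Python sketch tallies each breakdown and confirms that it adds up to the overall 23-16-1 result across 40 questions. The win counts are copied from the list above; the dictionary layout, category labels, and function names are illustrative assumptions, not part of Preply's methodology.

```python
# Win counts copied from the results listed above, as (ChatGPT, Google, ties).
# The grouping labels and structure are illustrative, not Preply's own.
BREAKDOWNS = {
    "difficulty": {"basic": (4, 7, 1), "intermediate": (15, 6, 0), "advanced": (4, 3, 0)},
    "stakes": {"low": (9, 8, 1), "medium": (10, 1, 0), "high": (4, 7, 0)},
    "objectivity": {"objective": (15, 9, 1), "subjective": (8, 7, 0)},
    "volatility": {"static": (18, 7, 1), "fluid": (5, 9, 0)},
}

# Headline result reported in the article: ChatGPT 23, Google 16, one tie.
OVERALL = (23, 16, 1)


def totals(groups):
    """Sum (ChatGPT, Google, ties) across every group in one breakdown."""
    return tuple(sum(column) for column in zip(*groups.values()))


def check_all():
    """Map each breakdown name to whether its totals match OVERALL."""
    return {name: totals(groups) == OVERALL for name, groups in BREAKDOWNS.items()}


if __name__ == "__main__":
    for name, ok in check_all().items():
        print(f"{name}: {totals(BREAKDOWNS[name])} matches overall: {ok}")
```

Each of the four breakdowns sums to 23 wins for ChatGPT, 16 for Google, and one tie, so the category figures are consistent with the headline score.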

Preply says the main takeaways from this experiment are that Google enjoys a significant advantage due to its access to real-time information and that it is more agile and voluminous as a research tool, given how many resources a Google search pulls up in its results. However, the company says Google’s buffet-style approach to answering questions comes at the cost of a sometimes confusing or distracting experience. Interestingly, the company asserts that ChatGPT’s responses often read as wiser and more mature, sounding like dialogue from a trusted teacher, which may appeal more to our prefrontal cortex than to our brain’s limbic system.

“Whereas ChatGPT almost always strikes a measured tone and offers thoughtful context, Google search results often reflect a more base human nature, filled with loud sales pitches and reductive framings intended to capture attention fast, at the expense of nuance and, sometimes, truth,” the company said. “Google is a quick and dynamic information delivery system, but one that appears to fail almost as much as it succeeds by putting users in front of the content we don’t want, in front of content that’s reductive, or simply leaving us to do a lot of the work ourselves.”

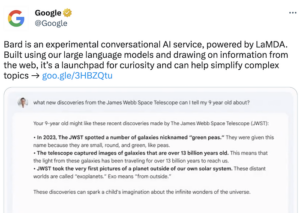

This screenshot shows the Twitter ad for Google’s Bard chatbot that gave an inaccurate fact about the James Webb Telescope. (Source: Google’s Twitter)

It will be interesting to see how the AI-powered search arms race between Google and Microsoft plays out. There were certainly a few captivating developments last week. At a surprise event, Microsoft announced it is working with OpenAI to develop a large language model even more powerful than the one behind ChatGPT (GPT-4, perhaps?) to supercharge its Bing search engine. Google fired back with the reveal of its own AI search assistant, Bard, as well as a live event from Paris. The lackluster event gave little new information about Bard, and many felt it was a rehashing of previous Google I/O presentations.

Inaccuracies inherent in this technology can have real-world consequences, as Google found out. Astute viewers of a Twitter ad for Bard noticed that the chatbot incorrectly claimed the James Webb Telescope was the first to photograph exoplanets. The news broke shortly after Wednesday’s event, and Google lost about $100 billion in market value as its stock price fell about 8%.

Read a detailed account of Preply’s research at this link.
