AI Researchers Issue Warning: Treat AI Risks as Global Priority

(Michael Traitov/Shutterstock)

Does artificial intelligence represent an existential threat to humanity along the lines of nuclear war and pandemics? A new statement signed by AI scientists and other notable figures says it should be treated as such.

The statement reads: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” The 22-word statement was published by the Center for AI Safety (CAIS), a San Francisco-based non-profit organization, which said the statement aims to open a global discussion about AI’s urgent risks.

Topping the list of signatories is Geoffrey Hinton, an AI research pioneer who recently left Google so he could speak more freely about what he calls the clear and present danger posed by AI, saying, “These things are getting smarter than us.”

Hinton, along with fellow endorser Yoshua Bengio, is one of the pioneers of the deep learning methods behind large language models such as GPT-4. Notably absent from the list is their third co-recipient of the 2018 Turing Award: Yann LeCun, chief AI scientist at Meta.

The statement was also signed by the CEOs of several major AI companies, including OpenAI’s Sam Altman, Google DeepMind’s Demis Hassabis, and Anthropic’s Dario Amodei.

A section of CAIS’s website lists potential AI risks, including weaponization, misinformation, deception, power-seeking behavior, and more. On the possibility of AI seeking power, the website says: “AIs that acquire substantial power can become especially dangerous if they are not aligned with human values. Power-seeking behavior can also incentivize systems to pretend to be aligned, collude with other AIs, overpower monitors, and so on. On this view, inventing machines that are more powerful than us is playing with fire.”

The organization also warns that political leaders who see strategic advantages in power-seeking AI may encourage its development, quoting Vladimir Putin, who said, “Whoever becomes the leader in [AI] will become the ruler of the world.”

The CAIS statement is the latest in a series of high-profile initiatives focused on AI safety. Earlier this year, a controversial open letter, supported by some of the same people endorsing the current warning, called for a six-month pause on training AI systems more powerful than GPT-4 and drew mixed reactions from the scientific community. Some critics argued that the letter overstated the risk posed by AI; others agreed that the risk was real but disagreed with the proposed solution.

The Future of Life Institute (FLI), which authored the earlier open letter, had this to say about the CAIS statement: “Although FLI did not develop this statement, we strongly support it, and believe the progress in regulating nuclear technology and synthetic biology is instructive for mitigating AI risk.”

FLI recommends a series of actions to mitigate this risk, including developing and instituting international agreements that limit the proliferation of particularly high-risk AI and address the risks of advanced AI, as well as founding intergovernmental organizations, similar to the International Atomic Energy Agency (IAEA), that promote peaceful uses of AI while reducing risk and enforcing guardrails.

Some experts call these open letters misguided and argue that AGI (artificial general intelligence, meaning autonomous systems with general intelligence) is not the most pressing concern. Emily Bender, a professor of computational linguistics at the University of Washington and a co-author of the “Stochastic Parrots” paper at the center of Google’s 2020 ouster of AI ethics co-lead Timnit Gebru, said in a tweet that the statement is a “wall of shame where people are voluntarily adding their own names.”

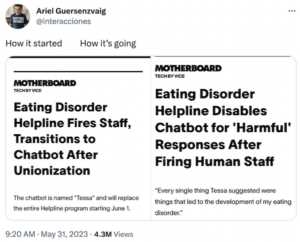

This meme circulating on Twitter highlights the more immediate harms of AI. (Source: Twitter)

“We should be concerned by the real harms that [corporations] and the people who make them up are doing in the name of ‘AI,’ not [about] Skynet,” she wrote.

One example of such harm is an eating disorder helpline that recently fired its human staff in favor of a chatbot called Tessa. The helpline, which has operated for 20 years, is run by the National Eating Disorders Association (NEDA). According to a report from Vice, after the helpline’s workers moved to unionize early last month, NEDA announced it would replace the helpline with Tessa as the group’s main support system.

NEDA took Tessa offline just two days before it was set to take over, because the chatbot had been encouraging harmful behaviors that could worsen eating disorders, such as severely restricting calories and weighing oneself daily.
