There Are Four Types of Data Observability. Which One is Right for You?

(Hangouts-Vector-Pro/Shutterstock)

Suppose you maintain a large set of data pipelines from external and internal systems, data warehouses, and streaming sources. How do you ensure that your data meets expectations after every transformation? That’s where data observability comes in. While the term data observability has been used broadly, it is essential to understand the different types of data observability solutions to pick the right tool for your use case.

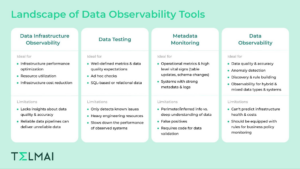

There are four types of data observability solutions:

● Data infrastructure observability

● Data testing

● Metadata monitoring

● Data observability

This article will discuss each of these solutions, their pros and cons, and the best use cases for each one.

1. Data Infrastrastucture Observability

As the name suggests, this type of observability is about the infrastructure in which the data is stored, shared, or processed. This type of observability focuses on eliminating operational blindspots and performance issues and reducing cost and infrastructure spending.

As data volumes increase, organizations continuously add more computing power and resources to handle their operational demands. This type of observability can help manage rising costs and outages.

Ideal Use Cases for Data Infrastructure Observability

Price and Capacity Optimization: These tools can identify overprovisioned and unused resources to help lower unexpected expenses. For example, they can monitor the usage of Snowflake or Databricks clusters and optimize the compute vs. costs of each. They also provide spend forecasting to help plan contracts, analyze current and projected spend, and track department-level budgeting and chargebacks.

Performance Improvements: Data infrastructure observability tools can identify long-running queries, bottlenecks, and performance improvement opportunities by analyzing data workloads. They offer built-in alerting that automatically notifies system admins about potential slowdowns and outages. They also offer performance simulation packages to help DataOps teams optimize the performance of existing resources and tune their systems for best resource utilization.

2. Data Testing

Data testing uses a set of rules to check if the data conforms to specific requirements. Data tests can be implemented throughout a data pipeline, from the ingestion point to the destination. A test validates a single data object at one particular point in the data pipeline.

For example, one test evaluates a field by comparing it to a business rule, such as a specific date format. Another test verifies the frequency of each date, and so on. As you can imagine, this process can be laborious, where for every business rule, a new test needs to be written, verified and maintained.

Ideal Use Cases for Data Testing

A Natural Fit for an ELT Approach: Today’s cloud data platforms, such as BigQuery, Snowflake, or Databricks Lakehouse, offer storage and computing power and the ability to process structured, semi-structured, and unstructured data. Therefore, organizations often use an ELT process to extract, load, and

A Natural Fit for an ELT Approach: Today’s cloud data platforms, such as BigQuery, Snowflake, or Databricks Lakehouse, offer storage and computing power and the ability to process structured, semi-structured, and unstructured data. Therefore, organizations often use an ELT process to extract, load, and

store data from various sources into these technologies and then subsequently use the computing and validation capabilities of these tools to clean and test the data for analysis. Data testing and validation frameworks fit right into this model.

Spot Checking in Legacy Stacks: Organizations that transform data using legacy ETL tooling to build aggregated data in cubes and data warehouse tables typically set up tests throughout the data pipeline and at every step to ensure the data stays consistent as it gets processed.

Model Validation: An essential part of building predictive models is testing the model’s performance against real-life test data sets before putting it into

production. After the model is created using a training data set, the values predicted by the model are compared to a validation/test data set. These comparison tests span from simple SQL checks to computer vision validation testing.

3. Metadata Monitoring

Instead of writing specific rules to assess if the data values meet your requirements, metadata monitoring looks into system logs and metadata to infer information about the health of the data pipelines. It constantly checks metrics such as schema changes, row counts, and table update timestamps and compares this information against historical thresholds to alert on abnormalities.

These tools provide high-level vital signs about the data to alleviate data engineering workloads. However, to ensure the quality and accuracy of data, they run queries against the underlying database to validate data values. This often overloads the data warehouse, impacts its performance, and increases its usage costs.

Ideal Use Cases for Metadata Monitoring

Operational Health of Data Warehouses: With out-of-the-box integrations to various databases and data warehouses, metadata monitoring tools eliminate engineering overhead in developing monitors that read each system’s metadata and logs. These tools track data warehouse operations and ensure it is up and running with no significant downtimes.

Incident Management: Using custom monitors that check for expected behavior, these tools can flag out-of-norm changes in data loads, issue tickets, assign incidents to the right teams, and reroute details to alerting tools for full resolution. Although a reactive strategy, this approach is useful

for building SLA between data teams and manually adjusting upstream data tests to prevent future issues.

Reports and Dashboards Integrity: Metadata observability tools have discovery capabilities in recognizing the upstream tables and schemas that feed critical business reports. They monitor and detect any changes in the schema and data loads of these tables to alert and notify downstream data owners

about potential issues.

A Starting Place for Building Further Data Testing: Often if an organization’s data pipelines have been put together over the years, data quality has not been prioritized. Metadata observability can help these organizations detect the most significant points of failure as a starting point for further testing and developing data accuracy checks.

4. Data Observability

Data observability is a deeper level of observability than metadata monitoring or data testing. It focuses on learning about the data itself and its patterns and drifts over time to ensure a higher level of reliability and trust in data.

Because in data observability, the data itself is the object of observation, not its metadata, the use cases are much broader. Data observability also spans beyond point-in-time data tests. Instead, it continuously learns from the data, detects its changes over time, and establishes a baseline to predict future expectations.

Ideal Use Cases for Data Observability

Anomaly Detection: Data is constantly changing. Data observability tools use ML and anomaly detection techniques to flag anomalous values in data at the

first scan (i.e., discovering values that fall outside normal distributions) as well as over time (i.e., drifts in data values using time series) and learn from historical patterns to predict future values. Data testing tools, on the other hand, have blindspots to changes in data, and metadata monitoring tools are simply not equipped to catch outliers in data values and patterns.

Business KPI Drifts: Since data observability tools monitor the data itself, they are often used to track business KPIs just as much as they track data quality drifts. For example, they can monitor the range of transaction amounts and notify where spikes or unusual values are detected. This autopilot system will show outliers in bad data and help increase trust in good data.

Data Quality Rule Building: Data observability tools have automated pattern detection, advanced profiling, and time series capabilities and, therefore, can be used to discover and investigate quality issues in historical data to help build and shape the rules that should govern the data going forward.

Observability for a Hybrid Data Ecosystem: Today, data stacks consist of data lakes, warehouses, streaming sources, structured, semi-structured, and unstructured data, API calls, and much more. The more complex the data pipeline, the harder it is to monitor and detect its quality and reliability issues.

Unlike metadata monitoring that is limited to sources with sufficient metadata and system logs – a property that streaming data or APIs don’t offer – data observability cuts through to the data itself and does not rely on these utilities. This opens observability to hybrid data stacks and complex data pipelines.

Shift to the Left for Upstream Data Checks: Since data observability tools discover data reliability issues in all data sources, they can be plugged in upstream as early as data ingest. This helps prevent data issues from manifesting into many shapes and formats downstream and nips the root cause of data incidents at the source. The results? A much less reactive data reliability approach and faster time to detect and faster time to resolve

data quality issues.

Closing Notes

We explored four types of data observability and the best use cases for each. While all four are integral parts of data reliability engineering, they differ vastly. The table above elaborates on their differences and shows how and where to implement each. Ultimately, it is the key needs of the business that determines which solution is best.

About the author: Farnaz Erfan is the founding head of growth at Telmai, a provider of observability tools. Farnaz is a product and go-to-market leader with over 20 years of experience in data and analytics. She has spent her career driving product and growth strategies in startups and enterprises such as Telmai, Marqeta, Paxata, Birst, Pentaho, and IBM. Farnaz holds a bachelor of science in computer science from Purdue University and spent the first part of her career as a software engineer building data products.

head of growth at Telmai, a provider of observability tools. Farnaz is a product and go-to-market leader with over 20 years of experience in data and analytics. She has spent her career driving product and growth strategies in startups and enterprises such as Telmai, Marqeta, Paxata, Birst, Pentaho, and IBM. Farnaz holds a bachelor of science in computer science from Purdue University and spent the first part of her career as a software engineer building data products.

Related Items:

Observability Primed for a Breakout 2023: Prediction

Why Roblox Picked VictoriaMetrics for Observability Data Overhaul

Companies Drowning in Observability Data, Dynatrace Says

December 20, 2024

- Reltio Recognized as Best-of-Breed Representative Vendor in 2024 Gartner Market Guide for Master Data Management Solutions

- CapStorm Releases Salesforce Connector Offering Seamless Data Integration with Snowflake

- LogicMonitor and AppDirect Partner to Bring Hybrid Observability Solutions to IT Service Providers

- Patronus AI Launches Small, High-Performance Judge Model for Fast and Explainable AI Evaluations

- Equinix Unveils Private AI Solution with Dell and NVIDIA for Secure, Scalable AI Workloads

December 19, 2024

- Hydrolix Reports Technology Partner Ecosystem Momentum

- EQTY Lab, Intel, and NVIDIA Introduce Verifiable Compute AI Framework for Governed AI Workflows

- NeuroBlade Empowers Next-Gen Data Analytics on New Amazon Elastic Compute Cloud F2 Instances

- Quantum Announces Support for NVIDIA GPUDirect Storage with Myriad All-Flash File System

- Kurrent Charges Forward with $12M for Event-Native Data Platform

- Timescale Details PostgreSQL’s Growing Adoption Across Industries in 2024

- Altair Enhances RapidMiner with Graph-Powered AI Agent Framework

- Esri Releases 2024 Update of Ready-to-Use US Census Bureau Data for ArcGIS Users

December 18, 2024

- Qlik Shares 2025 AI and Data Trends: Authenticity, Applied Value, and Agents

- Domo Releases 12th Annual ‘Data Never Sleeps’ Report

- Dresner Advisory Services Publishes 2024 Embedded Business Intelligence Market Study

- Starburst Helps Arity Streamline Data Insights with Scalable Lakehouse Architecture

- Vultr Expands Global Reach with New Funding at $3.5B Valuation

- Ataccama Extends Generative AI Capabilities to Accelerate Enterprise Data Quality Initiatives

- Menlo Ventures Announces Cohort Backed by $100M Anthology Fund Launched in Partnership with Anthropic