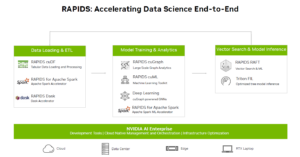

Nvidia Bolsters RAPIDS Graph Analytics with NetworkX Expansion

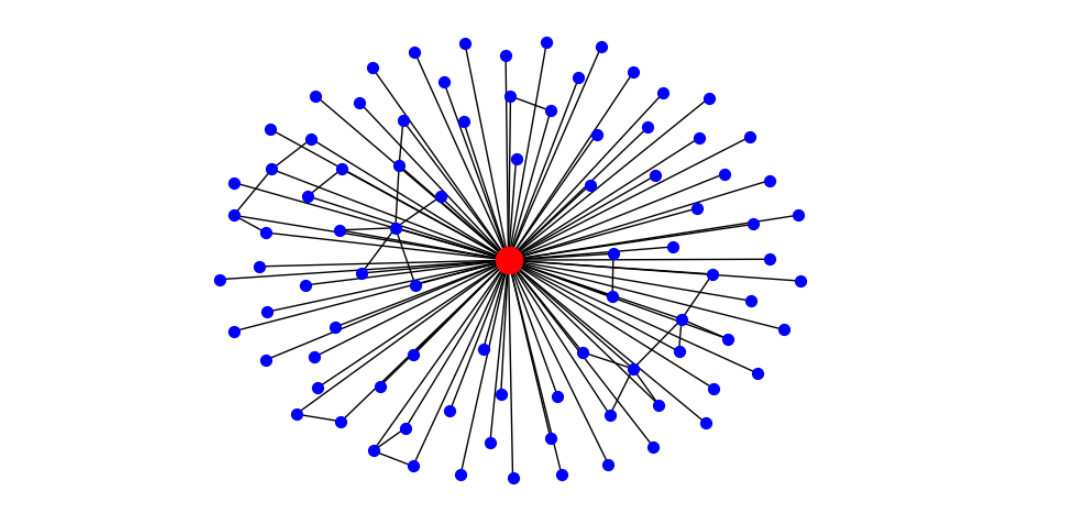

Ego Graph algorithm (source: NetworkX.org)

Nvidia has expanded its support for NetworkX graph analytics algorithms in RAPIDS, its open source suite of libraries for GPU-accelerated data science. The expansion means data scientists can run more than 40 NetworkX algorithms on Nvidia GPUs without changing their Python code, potentially cutting hours off the processing time for demanding graph problems.

Initially released by Los Alamos National Laboratory 19 years ago, NetworkX is an open source collection of Python-based algorithms “for the creation, manipulation, and study of the structure, dynamics, and functions of complex networks,” as the NetworkX website explains. It’s particularly well suited to large-scale graph problems, such as those with 10 million or more nodes and 100 million or more edges.

Nvidia has supported NetworkX in RAPIDS since November, when it held its AI and Data Science Summit. At that time, the company supported only three NetworkX algorithms, which limited the integration’s usefulness. This month, the company is broadening that support by bringing more than 40 NetworkX algorithms into the RAPIDS fold.

Nvidia customers who need the processing scale of GPUs to solve their graph problems will appreciate the addition of the algorithms into RAPIDS, says Nick Becker, a senior technical product manager with Nvidia.

“We’ve been working with the NetworkX community [and the core development team] for a while on making it possible to [have] zero code-change acceleration,” Becker tells Datanami. “Just configure NetworkX to use the RAPIDS backend, and keep your code the same. Just like we did with Pandas, you can keep your code the same. Tell your Python to use this separate backend, and [for anything it doesn’t accelerate,] it will fall back to the CPU.”
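The “zero code change” approach rests on NetworkX’s backend dispatching: a call such as `nx.betweenness_centrality(G)` can be routed to an installed backend, and Nvidia’s GPU backend is distributed as the `nx-cugraph` package. Recent NetworkX releases also accept an explicit `backend="cugraph"` keyword on dispatchable functions; the switch for enabling a backend automatically has changed between NetworkX versions, so the documentation for your release is the place to check. The sketch below does not use NetworkX at all. It is a hypothetical, standard-library-only model of the dispatch-with-fallback behavior Becker describes: `Dispatcher`, `register`, `call`, `degree_cpu`, and the `"gpu"`/`"cpu"` backend names are invented for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple


class Dispatcher:
    """Hypothetical model of backend dispatch with a CPU fallback.

    This is NOT NetworkX's real dispatcher; it only illustrates how one
    call site can be served by whichever configured backend implements
    the requested algorithm.
    """

    def __init__(self, priority: List[str]) -> None:
        # Backends to try in order; "cpu" is always appended as the last resort.
        self.priority = [b for b in priority if b != "cpu"] + ["cpu"]
        self.impls: Dict[str, Dict[str, Callable[..., Any]]] = {}

    def register(self, algo: str, backend: str, fn: Callable[..., Any]) -> None:
        # Record that `backend` provides an implementation of `algo`.
        self.impls.setdefault(algo, {})[backend] = fn

    def call(self, algo: str, *args: Any, **kwargs: Any) -> Tuple[str, Any]:
        # Return (backend_used, result) so the fallback is visible to the caller.
        available = self.impls.get(algo, {})
        for backend in self.priority:
            if backend in available:
                return backend, available[backend](*args, **kwargs)
        raise NotImplementedError(f"no backend implements {algo!r}")


def degree_cpu(edges: List[Tuple[str, str]]) -> Dict[str, int]:
    # Plain-Python degree count for an undirected edge list.
    counts: Dict[str, int] = {}
    for u, v in edges:
        counts[u] = counts.get(u, 0) + 1
        counts[v] = counts.get(v, 0) + 1
    return counts


dispatch = Dispatcher(priority=["gpu"])
dispatch.register("degree", "cpu", degree_cpu)
# Stand-in only: a real GPU backend would run different (device) code here.
dispatch.register("degree", "gpu", degree_cpu)
# Only the CPU implements this one, so calls to it fall back.
dispatch.register("edge_count", "cpu", len)

edges = [("a", "b"), ("b", "c")]
used, degrees = dispatch.call("degree", edges)          # served by "gpu"
used_fb, n_edges = dispatch.call("edge_count", edges)   # falls back to "cpu"
```

The calling code is identical in both calls; only the registry decides where the work runs, which is the property that lets an existing script pick up an accelerated backend without edits.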

Graph algorithms can solve a certain class of connected-network problems more efficiently than other computational approaches. By treating each piece of data as a node connected (via edges) to other nodes, a graph algorithm can determine how closely connected or similar that piece of data is to others. Going out a single hop is akin to doing a SQL join on relational data. But going out three or more hops means chaining that many joins, which becomes too computationally expensive with the standard SQL method, whereas the graph approach scales closer to linearly.
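To make the hop-by-hop picture concrete, here is a breadth-first search that collects every node within `k` hops of a starting node, the same set of nodes that NetworkX’s `ego_graph(G, n, radius=k)` returns as a subgraph. In SQL, each additional hop would be another self-join of an edge table; the traversal simply expands the current frontier, visiting each node in the neighborhood once. This is a minimal stdlib-only sketch; `build_adjacency`, `k_hop_neighborhood`, and the sample edges are hypothetical.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, Set, Tuple


def build_adjacency(
    edges: Iterable[Tuple[Hashable, Hashable]]
) -> Dict[Hashable, Set[Hashable]]:
    """Build an undirected adjacency map from an edge list."""
    adj: Dict[Hashable, Set[Hashable]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def k_hop_neighborhood(
    adj: Dict[Hashable, Set[Hashable]], source: Hashable, k: int
) -> Dict[Hashable, int]:
    """Return {node: hop distance} for every node within k hops of source."""
    dist: Dict[Hashable, int] = {source: 0}
    frontier = deque([source])
    while frontier:
        node = frontier.popleft()
        if dist[node] == k:
            continue  # at the radius: do not expand further
        for nbr in adj.get(node, ()):
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                frontier.append(nbr)
    return dist


# Hypothetical friendships in a small social graph.
friends = [("ann", "bob"), ("bob", "cat"), ("cat", "dan"), ("dan", "eve"), ("ann", "fay")]
adj = build_adjacency(friends)
one_hop = k_hop_neighborhood(adj, "ann", 1)    # comparable to a single join
three_hop = k_hop_neighborhood(adj, "ann", 3)  # comparable to three chained joins
```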

In the real world, graph problems naturally crop up in things like social media engagement, where a person (a node) can have many connections (or edges) to other people. Fraud detection is another classic graph analytic workload, with similar topologies appearing among groups of cybercriminals. With more NetworkX algorithms supported in RAPIDS, data scientists can bring the full power of GPU computing to bear on large-scale graph problems.

According to Becker, the expanded RAPIDS graph support can shave hours off the time needed to compute large graph problems on social media data.

“If I’m a data scientist, waiting two hours to calculate betweenness centrality (which is the name of an algorithm), that’s a problem, because I need to do this in an exploratory way. I need to iterate,” he says. “Unfortunately, that’s just not conducive to me doing that. But now I can take that same workflow with my Nvidia-powered laptop or workstation or cloud node, and go from a couple of hours to just a minute.”
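Betweenness centrality scores each node by how many shortest paths between other pairs of nodes pass through it. The standard exact method, Brandes’ algorithm, runs a breadth-first search from every node, so on an unweighted graph it costs O(V·E) time, which helps explain why it can take hours on graphs that are only moderately large. The following is a minimal pure-Python sketch for unweighted, undirected graphs. It returns raw (unnormalized) scores, whereas NetworkX’s `betweenness_centrality` normalizes by default and can approximate by sampling `k` source nodes; the function name and sample graphs here are illustrative.

```python
from collections import deque
from typing import Dict, Hashable, List, Set


def betweenness_centrality(adj: Dict[Hashable, Set[Hashable]]) -> Dict[Hashable, float]:
    """Unnormalized betweenness for an unweighted, undirected graph (Brandes).

    `adj` must be symmetric and list every node as a key.
    """
    bc: Dict[Hashable, float] = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Phase 1: BFS from s, counting shortest paths (sigma) and predecessors.
        order: List[Hashable] = []
        preds: Dict[Hashable, List[Hashable]] = {v: [] for v in adj}
        sigma: Dict[Hashable, int] = dict.fromkeys(adj, 0)
        dist: Dict[Hashable, int] = dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Phase 2: accumulate dependencies from the farthest nodes back to s.
        delta: Dict[Hashable, float] = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Every unordered pair was counted once from each endpoint.
    return {v: score / 2.0 for v, score in bc.items()}


path = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c"}}
square = {"a": {"b", "d"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"a", "c"}}
path_scores = betweenness_centrality(path)
square_scores = betweenness_centrality(square)
```

On the four-node path, the two inner nodes each sit on two shortest paths; on the square, every opposite pair has two equally short routes, so each node receives half credit.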

But the benefits aren’t limited to big graph problems, Becker says: the combination of NetworkX, RAPIDS, and GPU-accelerated hardware can help with smaller-scale problems too.

“This is important even on a smaller scale,” he says. “I don’t need to be solving the world’s largest problem to be affected by the fact that my graph algorithm takes 30 minutes or an hour to run on just a medium-sized graph.”

Betweenness centrality algorithm (Source: NetworkX.org)

NetworkX isn’t the first graph library Nvidia has supported with RAPIDS. The company has offered cuGraph as part of RAPIDS since RAPIDS launched in 2018, Becker says. The library is still a valuable tool, particularly because it offers advanced capabilities such as multi-node, multi-GPU execution. “It’s got all sorts of goodies,” the Nvidia product manager says.

But NetworkX’s adoption is much broader, with about 40 million monthly downloads and likely more than a million users. Distributed under the 3-clause (“new”) BSD license, NetworkX is already an established part of the PyData ecosystem. That existing momentum means NetworkX support could significantly boost adoption of RAPIDS for graph problems, Becker says.

“If you’re just using NetworkX, you’ve never had a good way to, without significant work, take your code and smoothly just use that on a GPU,” he says. “That’s what we’re really excited about, making it seamless.”

RAPIDS is a core component of Nvidia’s strategy for accelerating data science workloads. The suite is largely focused on supporting the PyData ecosystem, through libraries such as Pandas for dataframes and scikit-learn and XGBoost for traditional machine learning. It also supports Apache Spark and Dask for large-scale computing challenges.

Nvidia recently added support for accelerating Spark machine learning (Spark ML) workloads, complementing its earlier support for Spark DataFrame and SQL workloads, Becker says. It is also giving customers’ generative AI (GenAI) initiatives a boost with RAPIDS RAFT, which supports Meta’s Faiss library for similarity search as well as Milvus, a vector database.
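At its core, similarity search means finding the stored vectors closest to a query vector. Libraries such as Faiss, and vector databases such as Milvus, exist to do this quickly at scale, typically with approximate indexes and GPU kernels. The sketch below shows only the exact, brute-force baseline in pure Python using cosine similarity; it is not how Faiss, RAFT, or Milvus are implemented, and `cosine`, `top_k_similar`, and the toy vectors are hypothetical.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(
    query: Sequence[float], vectors: List[Sequence[float]], k: int
) -> List[Tuple[float, int]]:
    """Exact k-nearest-neighbor search: score every vector, keep the k best."""
    scored = ((cosine(query, v), i) for i, v in enumerate(vectors))
    return heapq.nlargest(k, scored)


# Toy 2-D "embeddings"; real embeddings often have hundreds of dimensions.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hits = top_k_similar([1.0, 0.1], embeddings, k=2)  # list of (score, index)
```

An exhaustive scan like this costs one similarity computation per stored vector per query, which is the cost that approximate nearest-neighbor indexes and GPU acceleration aim to reduce.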

“RAPIDS’ goal, which we reiterated in the Data Science Summit…is that we want to meet data scientists where they are,” Becker says. “And that means providing capabilities where it’s possible to do it within these experiences in a seamless way. But sometimes it’s challenging to do that. It’s a work in progress, and we want to enable people to move quickly.”

RAPIDS will be one of the topics Nvidia covers at next month’s GPU Technology Conference (GTC), which is being held in person for the first time in five years. GTC takes place March 18 through 21 in San Jose, California. You can register at https://www.nvidia.com/gtc/.
