Five Questions as the EU AI Act Goes Into Effect

(symbiot/Shutterstock)

The European Union’s Artificial Intelligence Act went into effect on August 1, becoming one of the first laws to implement broad regulation of AI applications. While not all elements of the law are being enforced yet, the new law is generating plenty of questions.

The AI Act, which was initially proposed by the European Commission in 2021, was formally approved by the European Parliament in March of this year. Like the General Data Protection Regulation (GDPR) law that came before it, the AI Act harmonizes existing AI laws among members of the European Union, while introducing new legal frameworks governing use of AI.

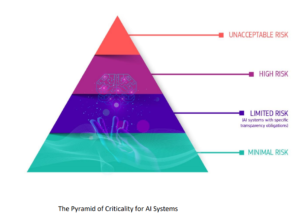

The law seeks to create a common regulatory framework for the use of AI that spans multiple fronts, including how AI applications are developed, what companies can use them for, and the consequences of breaking the law. Instead of regulating specific technologies, the new law largely looks at AI through an application lens, and judges the various AI applications by the risks they entail.

At the bottom of the pyramid are the lowest-risk uses of AI, such as search engines. Systems with limited risks, such as chatbots, are also legal but must abide by certain transparency requirements. Companies must receive approval before adopting AI for uses with higher risks, such as credit scoring or school admission. Other use cases, such as public facial recognition systems, are considered unacceptably risky, and are banned by law. Organizations that fail to adhere to the requirements face fines ranging from €7.5 million (about $8.1 million) or 1% of turnover all the way up to €35 million (about $38 million) or 7% of global revenue.
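To get a feel for what those figures mean for a given company, the short Python sketch below computes an upper bound on a fine at each end of the range. It is an illustration, not legal guidance: the names `FineTier`, `LOWEST_TIER`, `HIGHEST_TIER`, and `fine_ceiling` are hypothetical, and the sketch assumes that the larger of the fixed amount and the turnover percentage applies, a rule the figures above do not spell out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_cap_eur: int      # fixed amount quoted in the article, in euros
    turnover_percent: int   # percentage of annual turnover quoted in the article


# The two ends of the range quoted above; the tier names are hypothetical labels.
LOWEST_TIER = FineTier(fixed_cap_eur=7_500_000, turnover_percent=1)
HIGHEST_TIER = FineTier(fixed_cap_eur=35_000_000, turnover_percent=7)


def fine_ceiling(tier: FineTier, annual_turnover_eur: float) -> float:
    """Upper bound on a fine in euros.

    ASSUMPTION: the larger of the fixed cap and the turnover share applies.
    """
    turnover_share = annual_turnover_eur * tier.turnover_percent / 100
    return max(tier.fixed_cap_eur, turnover_share)
```

Under that assumption, `fine_ceiling(HIGHEST_TIER, 2_000_000_000)` gives €140 million for a firm with €2 billion in annual turnover, since 7% of turnover exceeds the €35 million fixed amount. For a smaller firm with €100 million in turnover, the fixed amounts dominate at both ends of the range.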

While it has been in effect for less than a week, there are many questions about the EU AI Act. Here are five of them:

1. Who will be impacted by the EU AI Act?

Like the GDPR, the AI Act’s impact will be felt far beyond the borders of Europe. The AI field is led by American firms such as Google, Meta, OpenAI, Amazon, Apple, and Anthropic, and they are expected to feel an impact from the new law.

In particular, these firms will need to be extra cautious in their data collection regimens to ensure that they don’t inadvertently snap up Europeans’ data for training purposes. Some of the firms may also cease distributing their AI models in Europe, as Facebook parent Meta has done with Llama.

2. How does the AI Act impact GenAI?

Because the EU AI Act regulates artificial intelligence based on the AI use case rather than specific technologies, there are no regulations specifically for generative AI. That’s good news for companies looking to take advantage of large language models (LLMs) and other foundation models.

However, that doesn’t mean that companies are absolved of all responsibility when rolling out GenAI applications, such as chatbots and AI assistants. Chatbots are in the “limited risk” category, which means companies are free to implement them, but they still must abide by certain requirements, such as disclosing how the models are trained, ensuring that the models are tested regularly, and ensuring that they don’t share private data.

3. Will the new law improve people’s lives?

European lawmakers hope the new law will prevent AI from harming people’s lives. But AI can also improve people’s lives. There has to be a balance, but have the Europeans found it? Guru Sethupathy, the co-founder and CEO of AI compliance firm FairNow, uses a comparison with another transformative technology–the motor vehicle–to explain the impact.

“When automobiles first came out, there were no sensors, dashboards, rearview mirrors, or check engine lights. People initially drove so slowly out of safety concerns that there was some thought that adoption would not take off. Over time, a regulatory apparatus (driver’s license, speed limits, and law enforcement) combined with safety technology (seatbelts, airbags, and child safety locks) built trust to such an extent that we now drive 70mph without concerns. Widespread automobile adoption required bridging the ‘trust gap,’” he tells Datanami.

“Similarly, for AI to be impactful and meaningfully adopted, we need to build trust in AI systems, which will require a combination of smart regulations and technology,” he continues. “New regulations like the EU AI Act and Colorado AI Act SB 205 are designed to add essential safety features to the rapidly evolving AI industry. Just as dashboards provide vital information and seatbelts protect drivers and passengers, these regulations safeguard businesses and consumers. They compel AI developers to implement critical protections against bias and unfairness, guiding the industry toward more responsible and ethical AI solutions. These legislative measures build a safer AI infrastructure that supports faster, more reliable innovation in the long run.”

4. Will it hurt innovation?

Anytime something is regulated, it risks stifling innovation. However, as the history of the car shows, effective regulation can also spur adoption, thereby driving innovation. The big question with the AI Act is which way it will go. According to Denas Grybauskas, the head of legal at Oxylabs, the answer is…not clear.

“As the AI Act comes into force, the main business challenges will be uncertainty in its first years,” Grybauskas says. “Various institutions, including the AI office, courts, and other regulatory bodies, will need time to adjust their positions and interpret the letter of the law. During this period, businesses will have to operate in a partial unknown, lacking clear answers if the compliance measures they put in place are solid enough.

“One business compliance risk that is not being discussed lies in the fact that the AI Act will affect not only firms that directly deal with AI technologies but the wider tech community as well,” he continues. “Currently, the AI Act lays down explicit requirements and limitations that target providers (i.e., developers), deployers (i.e., users), importers, and distributors of artificial intelligence systems and applications. However, some of these provisions might also bring indirect liability to the third parties participating in the AI supply chain, such as data collection companies.”

There is the possibility of unintended consequences with any major new regulation, of course, and the AI Act is no different. The good news is that enforcement of the AI Act is being rolled out over the course of 36 months, so hopefully the regulators can be responsive to negative consequences.

5. How will it impact American regulation?

If GDPR is any guide, the AI Act will spur a lot of discussion but not a whole lot of action, at least at the federal level. At the state level, GDPR helped usher in multiple laws, including the CCPA in California (since replaced by the CPRA).

The same pattern should play out here, says Eric Loeb, executive vice president of government affairs at Salesforce, who told CNBC that the AI Act is a model for regulation, and that “other governments should consider these rules of the road when crafting their own policy frameworks.”
