Tag: MoE

Snowflake Touts Speed, Efficiency of New ‘Arctic’ LLM

Snowflake today took the wraps off Arctic, a new large language model (LLM) that is available under an Apache 2.0 license. The company says Arctic’s unique mixture-of-experts (MoE) architecture, combined with its relat Read more…

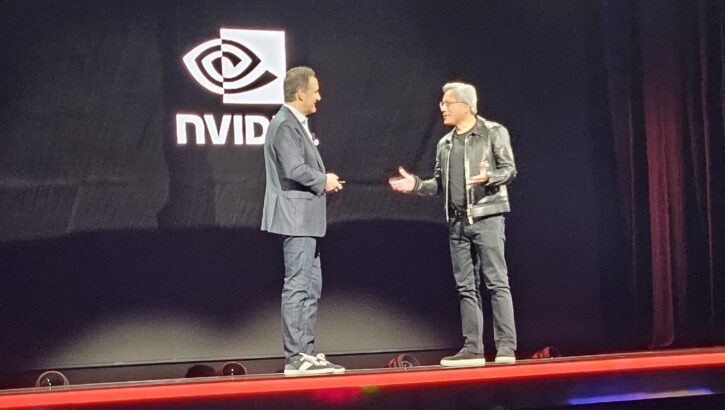

AWS Teases 65 Exaflop ‘Ultra-Cluster’ with Nvidia, Launches New Chips

AWS yesterday unveiled new EC2 instances geared toward tackling some of the fastest-growing workloads, including AI training and big data analytics. During his re:Invent keynote, CEO Adam Selipsky also welcomed Nvidia fo Read more…